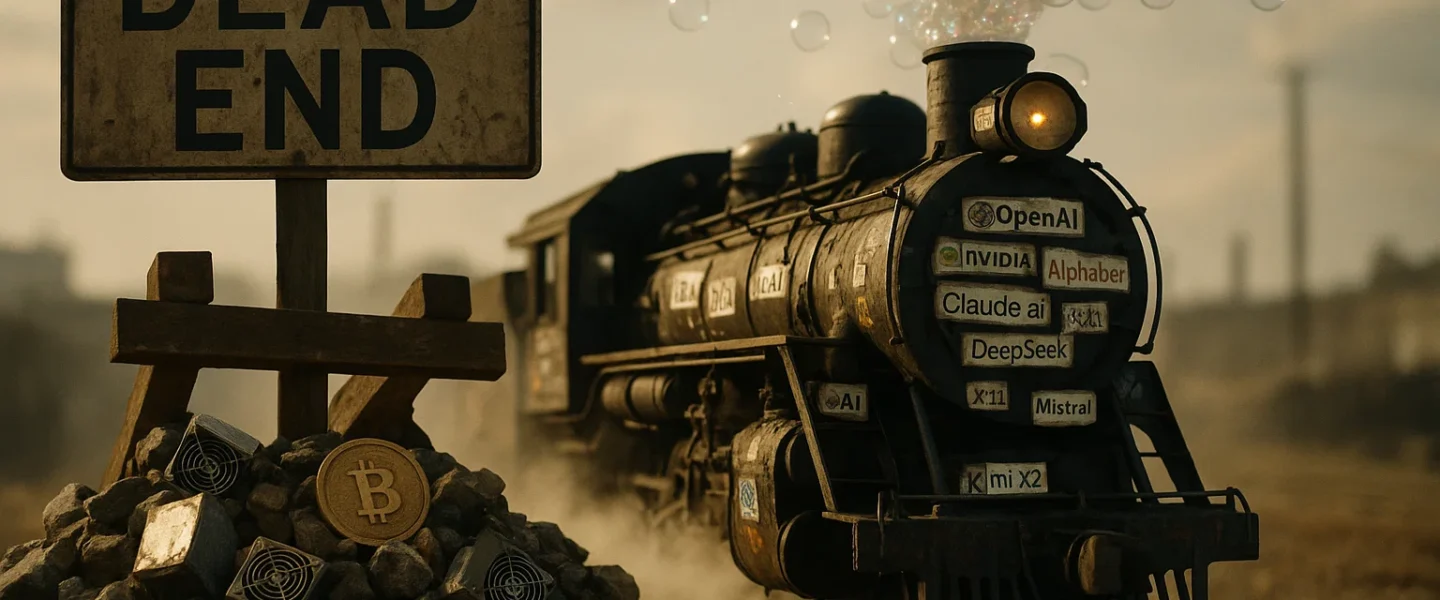

Another day, another article telling us the AI boom is just like the railroad buildout of the 1800s. “Don’t worry about the bubble—infrastructure always finds its users eventually!”

This is dangerously wrong. Here’s why:

The Fundamental Flaws

The railroad analogy isn’t wrong about everything. Both AI and railroads are foundational technologies with messy, bubbly beginnings that will create immense value eventually. But the analogy becomes dangerous when it obscures the qualitative differences in risk and depreciation cycles that matter for investors today.

The durability problem: Railroad ties last 40 years. Fiber conduits: 30. NVIDIA H100s: 3-4 years of economic life before newer GPU generations, and the CUDA releases built around them, leave them behind. When railroad companies overbuilt, those tracks eventually found users. But AI hardware needs to reach cash-flow breakeven in under 36 months or it’s effectively scrap. That’s a qualitatively different risk profile.
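
To make the 36-month point concrete, here is a minimal straight-line sketch. It assumes the same hypothetical capital outlay for a 40-year asset and a 36-month one, and it ignores interest, resale value and operating costs. The cost figure is invented for illustration; only the two lifetimes come from the paragraph above.

```python
# Straight-line capital recovery: a minimal sketch with a hypothetical cost.
# Ignores cost of capital, residual/resale value and operating expenses.

def monthly_capital_charge(cost: float, life_months: int) -> float:
    """Cash an asset must generate each month just to recover its purchase price."""
    if life_months <= 0:
        raise ValueError("life_months must be positive")
    return cost / life_months


COST = 1_000_000.0  # hypothetical outlay, identical for both assets

rail_like = monthly_capital_charge(COST, 40 * 12)  # 40-year life, like a railroad tie
gpu_like = monthly_capital_charge(COST, 36)        # 36-month breakeven window

print(f"40-year asset:  {rail_like:,.0f} per month")   # → 2,083 per month
print(f"36-month asset: {gpu_like:,.0f} per month")    # → 27,778 per month
print(f"Required monthly return is {gpu_like / rail_like:.1f}x higher")  # → 13.3x
```

Whatever the price tag, the ratio is simply 480 / 36 ≈ 13.3: the short-lived asset has to earn roughly thirteen times as much per month to pay back the same capital.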

The talent problem: The 1999 web stack (TCP/IP, HTML, SQL) is still 80% of today’s stack. The 2019 “AI engineer” stack (TensorFlow 1.x, GPT-2 fine-tuning) is already legacy. Your team’s skills depreciate faster than your chips, which was never true for rails or telecom.

The planning problem: Railroad builders had the luxury of long time horizons. Steam engines, transistors, and LLMs all reached scale by switchbacks, each bend forced by a pump that flooded, a switchboard that jammed, a compiler error at 3 AM. Anyone pitching you a 36-month Gantt chart for “AI transformation” is selling you a flat-earth map.

The Real Pattern

What this actually resembles is the crypto boom. Both are driven by:

- Technical complexity masquerading as inevitability

- Narrative over fundamentals (“This changes everything!”)

- FOMO investing from the same VCs who pivoted from blockchain to AI

- Real utility mixed with speculative excess

- Solutions in search of problems

UK railways and canals weren’t grand infrastructure plans—they emerged piecemeal in response to urgent demand for moving coal, cotton, and manufactured goods. Each route solved immediate transportation bottlenecks.

The few examples of top-down “systematic infrastructure,” like Scotland’s Caledonian Canal, were expensive failures that never found enough traffic to justify their cost. The canal lost money for a century: traffic roughly covered its operating costs, but the capital spent building it was never recovered (lesson noted). AI clusters abandoned after the 2026 GPU refresh will lose money far faster, because their write-down clock is measured in quarters, not centuries.

The Real Problem: Optimising Non-Bottlenecks

Recent data from McKinsey, BCG, and others shows the pattern clearly: 78% of firms use GenAI, but only 11% report any EBIT impact. The McKinsey figures are self-reported (n=1700, not weighted by sector), but they are likely directionally correct. Most respondents say it’s “too early to tell,” which is exactly what you’d expect when optimising non-constraints.
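
The “optimising non-constraints” point is the basic serial-process result: work can only flow as fast as the slowest step. A minimal sketch with invented step names and capacities (items per day) shows that doubling a non-bottleneck step leaves throughput unchanged, while the same boost at the bottleneck doubles it.

```python
# Serial process throughput: a minimal sketch with hypothetical steps.
# Capacities are items per day; all names and numbers are invented.

def line_throughput(capacities: dict[str, float]) -> float:
    """A serial process cannot move work faster than its slowest step."""
    return min(capacities.values())


def bottleneck(capacities: dict[str, float]) -> str:
    """Name of the step with the lowest capacity (the constraint)."""
    return min(capacities, key=capacities.get)


process = {"intake": 40.0, "drafting": 25.0, "legal_review": 8.0, "sign_off": 30.0}

before = line_throughput(process)
# Hypothetical pilot: double the speed of drafting, a non-constraint step.
after_drafting = line_throughput({**process, "drafting": process["drafting"] * 2})
# Apply the same boost to the constraint instead.
after_review = line_throughput({**process, "legal_review": process["legal_review"] * 2})

print(bottleneck(process), before, after_drafting, after_review)
# → legal_review 8.0 8.0 16.0
```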

A study of 758 BCG consultants found that those using AI finished tasks 25% faster, completed 12% more of them, and produced work rated over 40% higher in quality. Result? Zero change in billable hours per project. Clients just expected faster delivery for the same fee. The productivity gains were real but irrelevant.

The 5% of pilots that survive aren’t using better playbooks; they simply landed on a step people already curse daily, so an army of unpaid beta-testers keeps debugging the model at 2 AM. The rest die because no one’s forced to make them actually work.

Think drug discovery: AI can accelerate compound identification, but 90% of the time and cost is in physical trials, manufacturing, and regulatory approval. Speed up the search 10x and overall timelines improve by at most about 9%, and even an infinitely fast search can’t save more than 10%.
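
The drug-discovery arithmetic is Amdahl’s law: if only a fraction p of total time is sped up by a factor s, the new duration is (1 - p) + p / s of the old one. A minimal sketch, taking a split of roughly 10% search and 90% everything else as the assumption:

```python
# Amdahl's law applied to a pipeline where only one stage gets faster.

def time_saved(fraction: float, speedup: float) -> float:
    """Share of total duration saved when `fraction` of the work runs `speedup` times faster."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    new_duration = (1.0 - fraction) + fraction / speedup
    return 1.0 - new_duration


SEARCH_SHARE = 0.10  # assumption: compound search ~10% of time; trials, manufacturing, approval ~90%

print(f"10x faster search:   {time_saved(SEARCH_SHARE, 10):.1%} saved")    # → 9.0% saved
print(f"1000x faster search: {time_saved(SEARCH_SHARE, 1000):.1%} saved")  # → 10.0% saved
```

Past a point, further speedups of the search barely move the total; the ceiling is set entirely by the 90% that AI doesn’t touch.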

Like blockchain applications, most AI pilots are technically impressive but don’t solve actual constraints in existing systems. They optimise for demo-ability rather than practical utility.

The knowledge that turns a demo into infrastructure builds up through the daily grind of use, not through hiring extra PhDs. Every day the tool stays in live contact with real work, it gains another layer of tacit know-how. Park it in a pilot sandbox and that accretion stops.

The Incentives Keep This Going

The same VCs and entrepreneurs—Andreessen Horowitz first among them—who promised that blockchain would revolutionise supply chains, finance, and governance have seamlessly pivoted to AI transformation. The playbook is identical: pump massive valuations on disruption narratives, pass the bag to public markets, let someone else worry about whether the technology actually solves the problems it claims to address.

To be fair, hyperscaler capex is building durable compute infrastructure (data centres and the power and network capacity behind them, even if the GPUs inside are replaced every few years) that will outlast the application bubble. That’s the “railroad” that remains after the speculative companies die. But hyperscalers are guiding to over $200B in global AI capex for 2025. If that spend doesn’t flow through to EBIT by 2026, they’ll claw it back with 15-20% price hikes on the vanilla compute you do use. The bubble tax is coming whether you buy a GPU or not.

The Smart Move

Before you green-light the next AI budget: Ask the front-line team: “What broken step makes you miss dinner?” Map the end-to-end process, find the actual bottleneck with the highest work-in-progress pile-up, and run a shadow AI assist there for two weeks only.
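
Finding the step with the biggest work-in-progress pile-up needs nothing fancier than a count per step. A minimal sketch with hypothetical step names, WIP counts and daily throughputs; it also applies Little’s law (average time in a step ≈ WIP / throughput, valid only for a roughly steady-state process) to show how long work waits at each step.

```python
# Locating the WIP pile-up: a minimal sketch with hypothetical process data.
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    wip: int           # items currently queued at or in progress in this step
    throughput: float  # items completed per day


def wait_days(step: Step) -> float:
    """Little's law (steady state): average time in step = WIP / throughput."""
    return step.wip / step.throughput


def find_bottleneck(steps: list[Step]) -> Step:
    """The step with the largest work-in-progress pile-up."""
    return max(steps, key=lambda s: s.wip)


steps = [
    Step("intake", wip=12, throughput=20.0),
    Step("data_gathering", wip=45, throughput=9.0),
    Step("drafting", wip=15, throughput=10.0),
    Step("approval", wip=6, throughput=12.0),
]

for s in steps:
    print(f"{s.name:15s} WIP={s.wip:3d}  ~{wait_days(s):.1f} days in step")
print("Run the two-week shadow assist at:", find_bottleneck(steps).name)
```

In this invented data the largest pile-up and the longest wait fall on the same step; in real data they can diverge, which is worth checking before choosing where to run the pilot.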

If mean cycle time doesn’t improve by a statistically significant margin, kill the pilot.
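
For a two-week shadow pilot with a handful of completed items, a permutation test is one simple way to check significance without assuming normally distributed cycle times. A minimal sketch using only the standard library; the cycle times are invented, and the 0.05 threshold is a conventional assumption rather than something specified here.

```python
# One-sided permutation test on mean cycle time: a minimal stdlib-only sketch.
import random
from statistics import mean


def permutation_p_value(baseline, pilot, n_resamples=10_000, seed=0):
    """P-value for 'pilot mean cycle time is lower than baseline'.

    Shuffles the pooled observations and counts how often a random split
    shows a drop in mean at least as large as the one actually observed.
    """
    observed = mean(baseline) - mean(pilot)  # positive means the pilot is faster
    pooled = list(baseline) + list(pilot)
    n_base = len(baseline)
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        if mean(pooled[:n_base]) - mean(pooled[n_base:]) >= observed:
            hits += 1
    # Adding 1 to both counts keeps the estimate from ever being exactly 0.
    return (hits + 1) / (n_resamples + 1)


# Hypothetical cycle times in hours for items handled without / with the AI assist.
baseline = [30, 34, 29, 41, 38, 33, 36, 40, 31, 35]
pilot = [24, 27, 22, 30, 26, 25, 28, 23, 29, 26]

p = permutation_p_value(baseline, pilot)
verdict = "keep" if p < 0.05 else "kill"
print(f"mean {mean(baseline):.1f}h -> {mean(pilot):.1f}h, p = {p:.4f}: {verdict}")
```

With only two weeks of data the sample is small, so only fairly large improvements will clear the threshold.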

Railroads paid off—just not on the timetable the 1840s prospectuses promised. AI will likely do the same: twenty years from now some descendant of today’s transformer stack will sit inside most white-collar workflows the way steel rails still sit under freight cars.

The question isn’t whether AI will become infrastructure—it will. The question is whether you’re funding the backbone of tomorrow’s workflows, or underwriting the next abandoned canal.